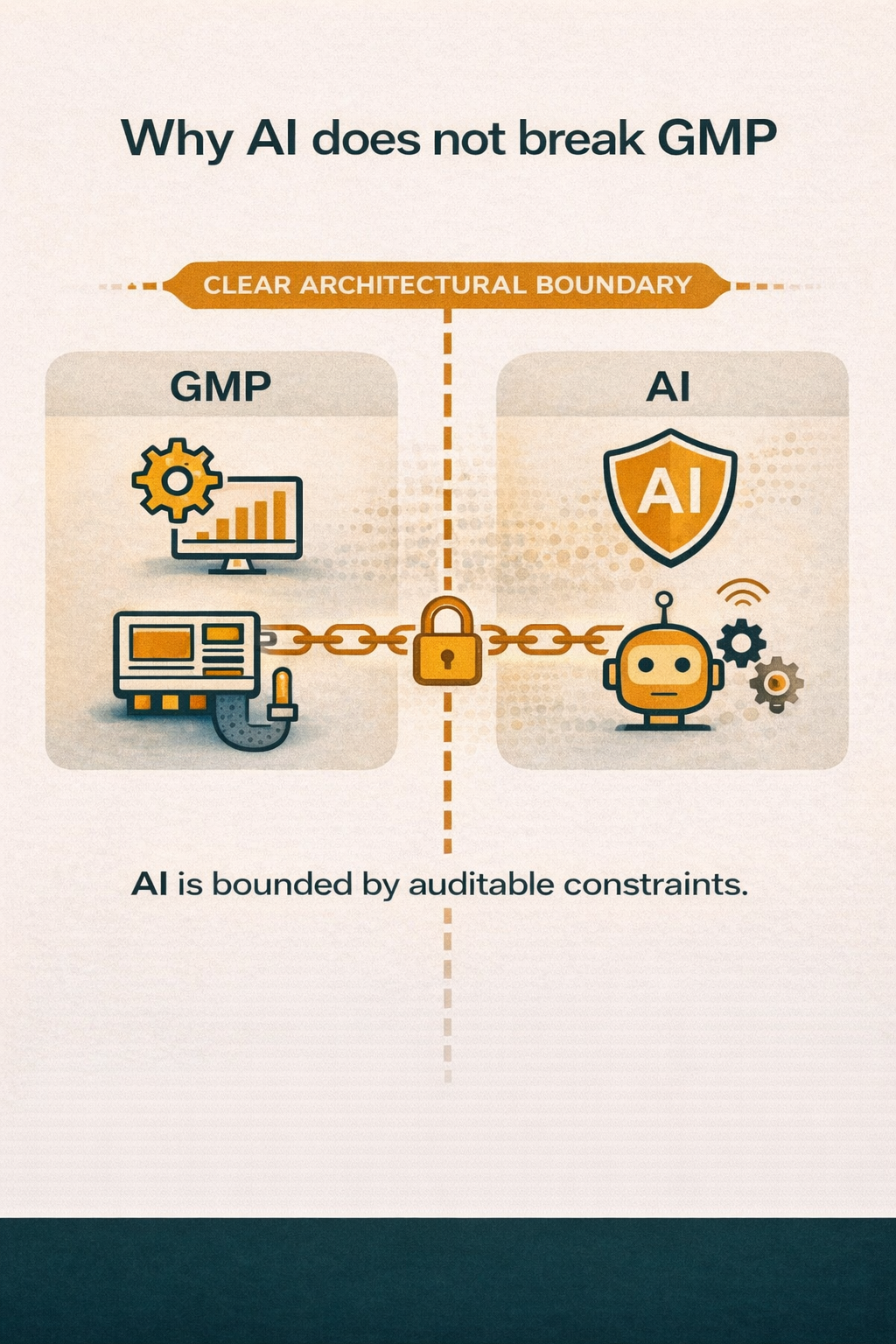

Why AI does not break GMP

AI and Good Manufacturing Practice (GMP) are often framed as opposites. Adaptive versus deterministic. Fast versus controlled.

Seen from inside real systems, this framing is misleading.

GMP does not break when AI appears. It breaks when architectural boundaries are unclear.

GMP exposes ambiguity early

GMP environments demand answers to simple questions:

What happened? When did it happen? Under which conditions? And who was responsible?

AI does not remove these questions. It makes them unavoidable.

Bounded influence is the key

AI works in GMP environments when its role is explicit.

Bounded influence. Observable behavior. Controlled change.

Often this means decision support before automation. Not because AI is weak, but because responsibility must remain traceable.
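To make "bounded influence" concrete, here is a minimal Python sketch. It assumes a setup where a model only proposes and a named person decides. The names `Recommendation`, `AuditRecord` and `review` are hypothetical and do not come from any GMP system or library. The record simply answers the four questions above: what happened, when, under which conditions, and who was responsible.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical sketch: the AI only proposes; a named person decides.
# Recommendation, AuditRecord and review are illustrative names, not a real API.


@dataclass(frozen=True)
class Recommendation:
    model_version: str  # which model produced the suggestion
    suggestion: str     # what the AI proposed
    confidence: float   # model's own score, recorded but never acted on automatically


@dataclass(frozen=True)
class AuditRecord:
    what: str         # the decision actually taken
    when: str         # UTC timestamp in ISO 8601 format
    conditions: dict  # context the decision was made under
    who: str          # the responsible person, never the model


def review(rec: Recommendation, reviewer: str, accept: bool, conditions: dict) -> AuditRecord:
    """Turn an AI recommendation into a human-owned, traceable decision."""
    if not reviewer:
        # Without a named reviewer, responsibility is not traceable: refuse.
        raise ValueError("a responsible reviewer is required")
    decision = rec.suggestion if accept else "rejected: " + rec.suggestion
    return AuditRecord(
        what=decision,
        when=datetime.now(timezone.utc).isoformat(),
        # Keep the model's output as context, separate from the decision itself.
        conditions={
            **conditions,
            "model_version": rec.model_version,
            "model_confidence": rec.confidence,
        },
        who=reviewer,
    )
```

The model version and confidence travel with the record as conditions, but `who` is always a person. That is the design choice the sketch illustrates: the AI influences the decision without ever owning it.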

GMP requirements tighten as AI moves closer to execution, from advising people toward acting on the process itself.

AI does not reduce responsibility. It increases it.